January 9, 2026

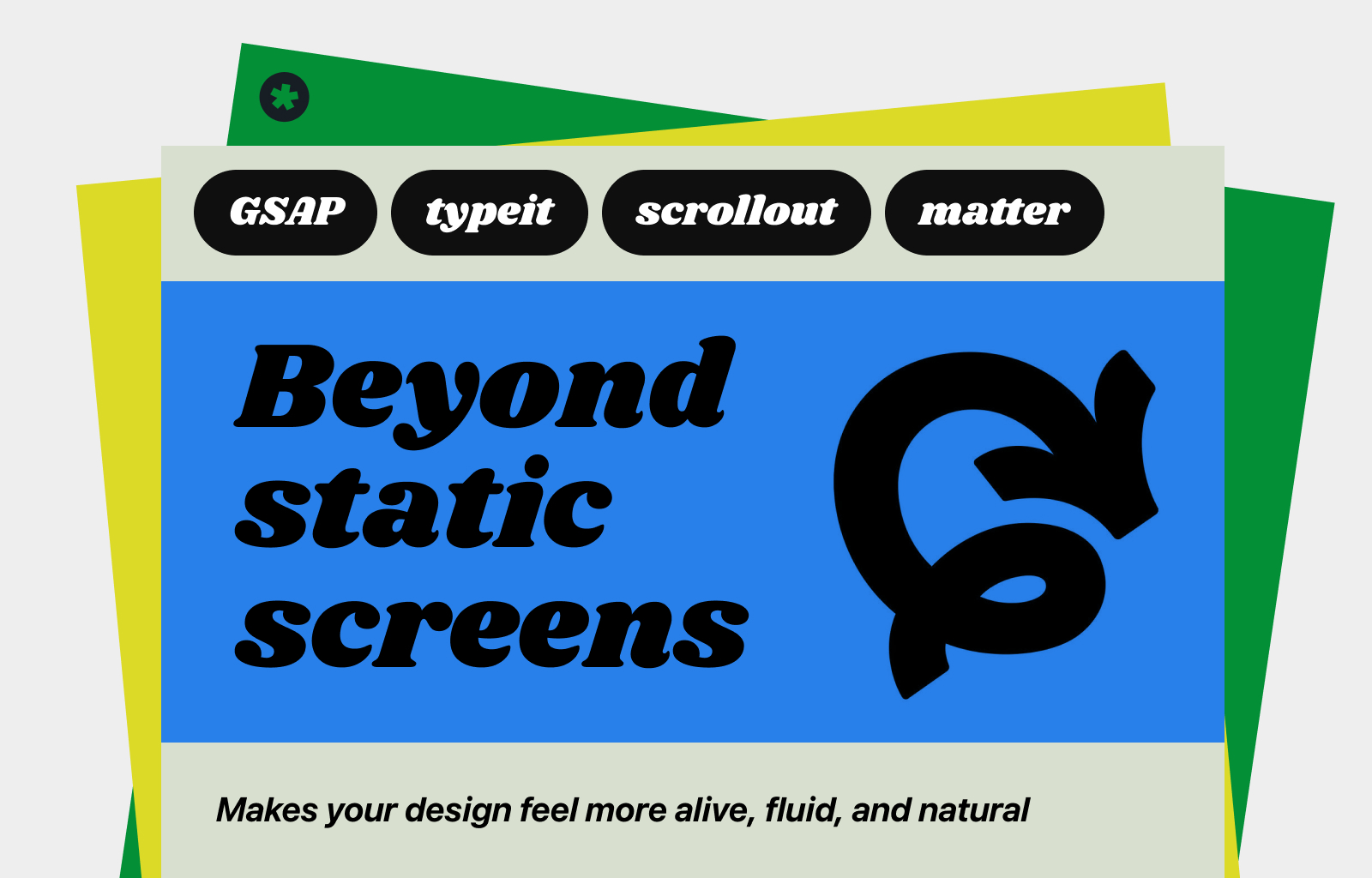

Beyond static screens

Making your designs feel more alive, fluid, and natural

Eugene, UX/UI Designer

Adding interaction to a static screen is not just about making elements move—it’s about bringing life, flow, and a sense of natural response into your design. Thoughtfully designed interactions react to user input, create rhythm, and help users intuitively understand what’s happening on the screen. In this article, I’ll introduce JavaScript libraries you can apply directly to your own designs, along with practical examples and code you can use right away.

Along the way, I’ll reference examples from the official documentation and pair them with my own implementations. The code shared here includes both officially provided references and my own interpretations—researched, adapted, and implemented to translate those ideas into a practical web context.

GSAP (GreenSock Animation Platform)

A wildly robust JavaScript animation library built for professionals

GSAP is a high-performance JavaScript animation library designed for creating precise and expressive motion on the web. It goes beyond simple visual effects, offering fine-grained control over timing, easing, and sequencing—making it ideal for interaction design where motion needs to respond naturally to user input and system states.

What sets GSAP apart is its reliability and flexibility in complex scenarios such as scroll-based interactions, chained animations, and dynamic UI feedback. With tools like timelines, designers and developers can structure motion as a clear, intentional flow, ensuring animations feel consistent, purposeful, and deeply integrated into the overall user experience.

How to use

1. Load the script

Load GSAP with a script tag, using the CDN link:

```html
<script src="https://cdnjs.cloudflare.com/ajax/libs/gsap/3.12.2/gsap.min.js"></script>
```

Or install the package with npm:

```shell
npm install gsap
```

Then import it in your script with `import { gsap } from "gsap";`.

2. The basic effect

To animate text, SVG, or any other element, use the basic syntax below.

```javascript
// gsap.from animates an element from the specified starting state to its current state.
// Here the title starts 20px lower and fully transparent, then rises and fades in.
gsap.from("#titleName", {
  delay: 0.5,
  y: 20,
  opacity: 0,
  ease: "expo.inOut"
});
```
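To make the "from" direction concrete without a browser, here is a minimal sketch in plain JavaScript. It is not GSAP's implementation: the hypothetical helper `sampleFrom` linearly interpolates a plain object from the values passed to it back to the values the object currently holds, ignoring easing and delay. `progress` runs from 0 (start of the tween) to 1 (end).

```javascript
// Hypothetical helper, not part of GSAP: returns the values a from-style
// tween would show at a given progress, using plain linear interpolation.
function sampleFrom(target, fromVars, progress) {
  const result = {};
  for (const key of Object.keys(fromVars)) {
    const start = fromVars[key]; // value passed to the from-style call
    const end = target[key];     // the value the target currently holds
    result[key] = start + (end - start) * progress;
  }
  return result;
}

// Current state of the title: resting position, fully visible.
const title = { y: 0, opacity: 1 };

// Start 20px lower and invisible, then settle into the current state.
console.log(sampleFrom(title, { y: 20, opacity: 0 }, 0));
console.log(sampleFrom(title, { y: 20, opacity: 0 }, 0.5));
console.log(sampleFrom(title, { y: 20, opacity: 0 }, 1));
```

Passing `opacity: 1` to a from-style tween on an element that is already fully visible interpolates from 1 to 1, so nothing fades; `opacity: 0` is what produces a fade-in.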

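```javascript
// gsap.to animates an element from its current state to the specified end state.
// Here the title moves 20px down and fades to full opacity; for the fade to be
// visible, #titleName must start with a lower opacity (for example, set in CSS).
gsap.to("#titleName", {
  delay: 0.5,
  y: 20,
  opacity: 1,
  ease: "expo.inOut"
});
```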
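Both tweens above use `ease: "expo.inOut"`. The sketch below assumes the classic exponential in-out curve that this ease is named after; GSAP's internal code may differ in detail, and `expoIn` and `expoInOut` are hypothetical names. Each function maps linear progress `p` (0 to 1) to eased progress.

```javascript
// Exponential ease-in: a very slow start followed by a steep rise.
// p = 0 is special-cased so the curve starts at exactly 0.
function expoIn(p) {
  return p === 0 ? 0 : Math.pow(2, 10 * (p - 1));
}

// In-out variant: the first half is the ease-in curve compressed into [0, 0.5];
// the second half mirrors it, so motion accelerates and then decelerates.
function expoInOut(p) {
  return p < 0.5 ? expoIn(p * 2) / 2 : 1 - expoIn((1 - p) * 2) / 2;
}

// Sample the curve: most of the change happens around the middle.
for (const p of [0, 0.1, 0.25, 0.5, 0.75, 0.9, 1]) {
  console.log(p.toFixed(2), expoInOut(p).toFixed(4));
}
```

With this curve, a quarter of the way through the duration the element has covered only about 1.6% of the distance (0.015625), and at three quarters about 98.4% (0.984375). That is why an exponential in-out ease reads as a slow start, a fast middle, and a soft landing.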

The expressive motion scheme overshoots the final values to add bounce.

Expressive is Material Design's opinionated motion scheme and should be used in most situations, particularly hero moments and key interactions.

The standard motion scheme eases into the final values.
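In GSAP terms, the difference between the two schemes is roughly the difference between an overshooting ease such as `back.out(1.7)` and a plain deceleration such as `power2.out`. The sketch below compares the classic back-out and cubic ease-out formulas as stand-ins. It assumes these curves, which are not Material's own motion specifications, and `backOut`, `cubicOut`, and `peak` are hypothetical helpers.

```javascript
// Illustrative stand-ins for the two schemes, not Material's actual curves.
// Each maps progress t in [0, 1] to an eased value.

// "Expressive"-style: back-out easing passes 1 before settling back to it.
// s controls the amount of overshoot; 1.70158 is the conventional default.
function backOut(t, s = 1.70158) {
  const u = t - 1;
  return (s + 1) * u * u * u + s * u * u + 1;
}

// "Standard"-style: cubic ease-out decelerates into 1 without passing it.
function cubicOut(t) {
  return 1 - Math.pow(1 - t, 3);
}

// Highest value a curve reaches, sampled at evenly spaced points in [0, 1].
function peak(ease, steps = 100) {
  let max = -Infinity;
  for (let i = 0; i <= steps; i++) {
    max = Math.max(max, ease(i / steps));
  }
  return max;
}

console.log("back out peak:", peak(backOut).toFixed(3));   // above 1: overshoot
console.log("cubic out peak:", peak(cubicOut).toFixed(3)); // never above 1
```

Raising `s` exaggerates the bounce; setting it to 0 removes the overshoot entirely and leaves a plain cubic ease-out.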